Extract Transform Load testing — most commonly referred to as ETL testing — is a critical tool in the world of modern business intelligence and data analytics.

Teams must collect data from disparate sources so that they can store it in data warehouses or prepare it for business intelligence tools that support quality decision-making and insights. ETL testing helps ensure the processes, data, and insights are up to scratch and ready to support the business.

Let’s explore what Extract Transform Load testing is and how it works before sharing some of the different approaches and tools you can use for ETL testing.

What is Extract-Transform-Load, and how does it work?

Extract-Transform-Load (ETL) is a crucial concept in data warehousing and analytics. In effect, ETL describes the process of collecting data from multiple sources and centralizing it in a data warehouse or data lake.

Let’s break down the ETL process into its constituent parts so you can understand it more clearly.

1. Extract:

Data is extracted from various sources. These sources could be an existing database, an ERP or CRM application, spreadsheets, web services, or different files.

2. Transform:

Once the data is extracted, you must transform it so that it’s suitable for storage or analysis. The process might involve cleaning and normalizing the data and converting it into an appropriate format.

3. Load:

The last part of the process consists of loading data into the target system. This target system could be a data warehouse, data lake, or other repository.
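To make the three steps concrete, here is a minimal sketch in Python using only the standard library. The CSV content, the `customers` table, and the function names are all assumptions made up for illustration; a real pipeline would read from your actual sources and write to your actual warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a small CSV export from a CRM system.
SOURCE_CSV = """id,name,signup_date
1, Alice ,2024-01-05
2,BOB,2024-02-17
"""

def extract(csv_text):
    """Extract: read raw rows from the source (a CSV string here)."""
    return list(csv.DictReader(io.StringIO(csv_text)))

def transform(rows):
    """Transform: cast ids to integers and normalize name formatting."""
    return [
        (int(r["id"]), r["name"].strip().title(), r["signup_date"])
        for r in rows
    ]

def load(rows, conn):
    """Load: write the transformed rows into the (hypothetical) target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()

def run_pipeline(csv_text, conn):
    """Run extract, transform, and load in sequence."""
    load(transform(extract(csv_text)), conn)
```

Calling `run_pipeline(SOURCE_CSV, sqlite3.connect(":memory:"))` runs the whole flow against an in-memory database, which is a handy stand-in for a target system when you are experimenting.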

While ETL has been around since the 1970s, it has taken on increased importance recently due to the business community’s broader reliance on cloud-based systems, real-time data, analytics, and ML/AI tools.

What is ETL testing?

ETL testing is a type of data processing testing that verifies that data gathered from one source has been accurately transmitted to its destination. As described above, once the data is extracted, it must be transformed according to business requirements. This transformation can occasionally lead to issues with the data. An ETL testing approach helps ensure the data is reliable and accurate.

ETL testing is a kind of black box testing because it validates the extract, transform, and load process by comparing inputs with outputs. In effect, it focuses on what the system does in response to different inputs rather than how it achieves those results. However, in certain situations, testers will look at what is happening inside the box, especially when unexpected scenarios occur.

How does extract transform load testing work?

The easiest way to explain how ETL testing works is to split it into its constituent parts: extract, transform, and load. From there, you can understand the different elements of ETL validation before we break down the stages more granularly.

1. Extract

ETL testing validates that the data pulled from the source is accurate and error-free. This process involves checking basic value accuracy and ensuring that data is complete.

Another part of the process involves data profiling. This process effectively consists of understanding the source data’s structure, content, and quality. The idea here is that you can unearth any anomalies, inconsistencies, or potential mapping issues.
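As a rough illustration of data profiling, the sketch below builds a simple per-column summary for rows represented as Python dictionaries. The `profile` function and its report fields are assumptions chosen for this example, and dedicated profiling tools go much further, but the idea is the same: count what is missing, how varied the values are, and which data types show up.

```python
from collections import Counter

def profile(rows):
    """Build a simple per-column profile for a list of dict rows."""
    report = {}
    columns = {col for row in rows for col in row}
    for col in sorted(columns):
        values = [row.get(col) for row in rows]
        # Treat None and empty strings as missing values.
        present = [v for v in values if v not in (None, "")]
        report[col] = {
            "rows": len(values),
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    return report
```

A column that reports more than one entry under `types`, for example both `float` and `str`, is often an early hint of a mapping issue worth raising before transformation logic is written.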

2. Transform

The next part of the process checks strict adherence to data transformation rules. One of the main approaches here involves testing transformation logic against regulations, laws, and other business rules.

Some of the typical tests here involve checking if data converts into expected formats, whether calculations are accurate, and verifying if lookups link elements between datasets.

Data quality also comes under consideration. Testers must find and remove formatting inconsistencies and duplicates and resolve any conflicting data while applying data cleansing processes.

Finally, overall performance is also tested to find out how the ETL process is affected by large volumes of data.

3. Load

Finally, when data is loaded into the data warehouse, data lake, or other final target, testers must verify if it is complete, accurate, and presented in the correct format.

Comparisons are run to check that no data has been lost or corrupted on the path between the source, staging area, and targets.

Finally, audit trails are examined to confirm that the process records any changes that occur during ETL and that history and metadata are present.

The section above should give you a basic overview of how the ETL data quality checks are performed. You’ll note that tests occur at each stage of data transmission because it’s the best way to identify and resolve particular issues.

However, for a deeper understanding of ETL testing concepts, you must explore the different types of ETL testing and the stages where they are applied. The following two sections will provide this information and help give you the complete picture you need.

Different types of ETL testing

There are lots of different types of validation in ETL testing. They are used in different scenarios and for a wide range of aims. Let’s explore the types of ETL testing and where and when you should use them.

1. Source Data Validation Testing

Importance:

Source data validation testing ensures that the source data is high-quality and consistent before it is extracted for transformation.

What it checks:

- Does data adhere to business rules?

- Do data types and formats match expectations?

- Does data fall within valid ranges?

- Are there null or missing values in unexpected places?
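The checks above can be sketched as a small rule table applied to every source row. Everything here is hypothetical: the column names (`order_id`, `quantity`, `order_date`), the ranges, and the `validate_source` helper are placeholders for whatever your own business rules specify.

```python
from datetime import datetime

def _is_iso_date(value):
    """Return True if value parses as a year-month-day date string."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except (TypeError, ValueError):
        return False

# Hypothetical rules: column name -> (description, check function).
RULES = {
    "order_id": ("must be a positive integer",
                 lambda v: isinstance(v, int) and v > 0),
    "quantity": ("must be between 1 and 1000",
                 lambda v: isinstance(v, int) and 1 <= v <= 1000),
    "order_date": ("must be a YYYY-MM-DD date", _is_iso_date),
}

def validate_source(rows, rules=RULES):
    """Return a list of (row_index, column, problem) for every violation."""
    problems = []
    for i, row in enumerate(rows):
        for col, (description, check) in rules.items():
            value = row.get(col)
            if value is None:
                problems.append((i, col, "missing value"))
            elif not check(value):
                problems.append((i, col, description))
    return problems
```

Returning a list of problems, rather than stopping at the first one, tends to be more useful for testers because a single run shows the full extent of the issues in the source.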

2. Source to Target Data Reconciliation Testing

Importance:

This type of testing validates whether all data from a particular source is extracted, transformed, and loaded into the target system.

What it checks:

- Was data lost during the ETL process?

- Was data duplicated during the ETL process?
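One way to approach reconciliation is to compare source and target by key rather than relying on row counts alone. The sketch below uses SQLite from Python’s standard library as a stand-in for both systems; the `reconcile` helper and its parameters are assumptions for illustration, and it presumes that source and target are reachable from the same connection, which is often not the case in practice.

```python
import sqlite3

def reconcile(conn, source_table, target_table, key):
    """Compare two tables by key. Table and column names are trusted inputs."""
    def scalar(sql):
        return conn.execute(sql).fetchone()[0]

    # Keys present in the source but absent from the target (lost data).
    missing = [r[0] for r in conn.execute(
        f"SELECT s.{key} FROM {source_table} s "
        f"LEFT JOIN {target_table} t ON s.{key} = t.{key} "
        f"WHERE t.{key} IS NULL ORDER BY s.{key}"
    )]
    # Keys that appear more than once in the target (duplicated data).
    duplicated = [r[0] for r in conn.execute(
        f"SELECT {key} FROM {target_table} "
        f"GROUP BY {key} HAVING COUNT(*) > 1 ORDER BY {key}"
    )]
    return {
        "source_rows": scalar(f"SELECT COUNT(*) FROM {source_table}"),
        "target_rows": scalar(f"SELECT COUNT(*) FROM {target_table}"),
        "missing_in_target": missing,
        "duplicated_in_target": duplicated,
    }
```

Note that a lost row and a duplicated row cancel each other out in a plain count comparison, which is why the key-level checks are included alongside the totals.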

3. Data Transformation Testing

Importance:

Data transformations can involve a lot of different things, like format changes, calculations, aggregations, and so on. Data transformation testing checks whether the transformations have happened as intended.

What it checks:

- Is the data as expected after transformations?

- Has the business logic been implemented properly during transformations?

- Have calculations performed during transformation produced the correct output?
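A common pattern for transformation testing is to feed known inputs through the transformation and compare the result with hand-calculated expected outputs. The `transform_order` rule, the lookup table, and the test cases below are all invented for this sketch; in practice they would come from your source-to-target mapping document.

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical reference data used by the lookup step.
COUNTRY_LOOKUP = {"DE": "Germany", "FR": "France"}

def transform_order(raw):
    """Hypothetical transformation rule for one order record."""
    return {
        "order_id": int(raw["order_id"]),
        # Format change: DD/MM/YYYY -> ISO 8601.
        "order_date": datetime.strptime(raw["order_date"], "%d/%m/%Y").date().isoformat(),
        # Calculation: Decimal avoids floating-point rounding in money values.
        "total": Decimal(raw["unit_price"]) * int(raw["quantity"]),
        # Lookup: map a country code to its name.
        "country": COUNTRY_LOOKUP.get(raw["country_code"], "Unknown"),
    }

# Each case pairs a raw input with its hand-calculated expected output.
TEST_CASES = [
    (
        {"order_id": "7", "order_date": "05/01/2024", "unit_price": "19.99",
         "quantity": "3", "country_code": "DE"},
        {"order_id": 7, "order_date": "2024-01-05", "total": Decimal("59.97"),
         "country": "Germany"},
    ),
    (   # Edge case: zero quantity and an unmapped country code.
        {"order_id": "8", "order_date": "29/02/2024", "unit_price": "4.50",
         "quantity": "0", "country_code": "XX"},
        {"order_id": 8, "order_date": "2024-02-29", "total": Decimal("0"),
         "country": "Unknown"},
    ),
]

def run_transformation_tests(cases=TEST_CASES):
    """Return (input, expected, actual) for every case that does not match."""
    failures = []
    for raw, expected in cases:
        actual = transform_order(raw)
        if actual != expected:
            failures.append((raw, expected, actual))
    return failures
```

An empty list from `run_transformation_tests()` means every case matched. This keeps the black-box spirit of ETL testing, since the test only compares inputs with outputs.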

4. Data Validation Testing

Importance:

Tests whether the final, transformed data conforms to business requirements.

What it checks:

- Are data quality standards (i.e., accuracy, completeness) being met?

- Are business rules being followed?

5. ETL Referential Integrity Testing

Importance:

Validates that relationships between tables in the source data have been faithfully reproduced in the target data.

What it checks:

- Do foreign keys in the data match their corresponding primary keys?

- Are child and parent table relationships maintained after ETL?
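A simple way to check referential integrity is to look for "orphans": child rows whose foreign key points at a parent row that does not exist in the target. The sketch below again uses SQLite as a stand-in, and the `find_orphans` helper and its parameter names are assumptions for illustration.

```python
import sqlite3

def find_orphans(conn, child, fk, parent, pk):
    """Return child foreign-key values that have no matching parent row.

    Table and column names are trusted inputs in this sketch.
    """
    sql = (
        f"SELECT DISTINCT c.{fk} FROM {child} c "
        f"LEFT JOIN {parent} p ON c.{fk} = p.{pk} "
        f"WHERE c.{fk} IS NOT NULL AND p.{pk} IS NULL "
        f"ORDER BY c.{fk}"
    )
    return [row[0] for row in conn.execute(sql)]
```

For example, `find_orphans(conn, "orders", "customer_id", "customers", "id")` would list the customer ids that orders refer to but that never arrived in the `customers` table. Null foreign keys are skipped here on the assumption that they are allowed; whether that holds depends on your own business rules.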

6. Integration Testing

Importance:

Integration tests validate whether the ETL process integrates and functions within the larger data ecosystem.

What it checks:

- Do the end-to-end data flows work smoothly?

- How well does the ETL process interact with the other systems, such as the source, target, or other downstream applications that rely on the data?

7. Performance Testing

Importance:

ETL performance testing evaluates how efficient the ETL process is when put under duress, such as heavy load.

What it checks:

- Does ETL processing time meet business requirements or benchmarks?

- Can the ETL process scale in response to increasing data volumes?

- Does the ETL process have any resource constraints or bottlenecks that must be addressed?
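To get a first feel for how processing time scales, you can time the same ETL step against increasing data volumes and compare each run with an agreed budget. The `measure_scaling` and `over_budget` helpers below are hypothetical, and timings from a laptop or a small test environment will not reflect production, so treat this as a sketch of the approach rather than a benchmark.

```python
import time

def measure_scaling(etl_fn, make_data, volumes):
    """Run etl_fn on inputs of increasing size and record elapsed seconds."""
    results = []
    for n in volumes:
        data = make_data(n)  # Build the input outside the timed section.
        start = time.perf_counter()
        etl_fn(data)
        elapsed = time.perf_counter() - start
        results.append({
            "rows": n,
            "seconds": elapsed,
            "rows_per_second": n / elapsed if elapsed > 0 else float("inf"),
        })
    return results

def over_budget(results, max_seconds):
    """Return the volumes whose run time exceeded the agreed budget."""
    return [r["rows"] for r in results if r["seconds"] > max_seconds]
```

If `rows_per_second` drops sharply as the volume grows, that is a sign the process does not scale linearly and may have a bottleneck worth investigating.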

8. Functional Testing

Importance:

Functional testing validates whether the ETL process meets project requirements from the user’s perspective.

What it checks:

- Do outputs align with stated business requirements?

- Do reports generate accurate results?

- Do dashboards show expected data?

9. Regression Testing

Importance:

ETL processes are highly complex, with a lot of interrelated data. Even small changes in the process can affect output at the target. Regression testing is vital for identifying these unexpected outcomes.

What it checks:

- Are changes in code or underlying data suddenly causing adverse effects?

- Have changes had the desired effect on improving the ETL process?
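One lightweight way to spot regressions is to run the pipeline on a fixed input, store a fingerprint of the known-good output, and compare later runs against it. The sketch below is one possible approach, with `fingerprint` and `regression_check` as hypothetical helpers; it assumes the output rows are JSON-serializable dictionaries and that row order does not matter.

```python
import hashlib
import json

def fingerprint(rows):
    """Order-independent SHA-256 hash of a dataset of dict rows."""
    # Serialize each row with sorted keys, then sort the rows themselves,
    # so neither column order nor row order changes the result.
    canonical = sorted(json.dumps(r, sort_keys=True) for r in rows)
    return hashlib.sha256("\n".join(canonical).encode("utf-8")).hexdigest()

def regression_check(current_rows, baseline_fingerprint):
    """Return True if the current output matches the stored baseline."""
    return fingerprint(current_rows) == baseline_fingerprint
```

A mismatch only tells you that something changed, not what. When a change is intentional, the baseline needs to be regenerated and reviewed; when it is not, a row-level comparison is the usual next step.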

It’s worth noting that we could include Unit Testing on this list. However, instead, we have included the constituent parts that Unit Testing would cover, such as Source Validation Testing, Source to Target Data Reconciliation Testing, and so on.

8 stages of ETL testing with 8 expert tips for success

OK, now that you understand the different types of validation in ETL testing, it’s time to put it all together. ETL testing is commonly carried out with a multistage approach, which we will present below.

#1. Gathering business requirements

The first stage of any testing process involves gathering requirements. Testers must have a consensus about what the ETL process is meant to deliver. Some questions that should be answered at this early stage include:

- How will the data be used?

- What output formats are required?

- What are the performance expectations?

- What regulations, laws, or company policies govern the use of the data?

Expert tip:

While adhering to requirements is a must, ETL testers should use their knowledge and expertise to proactively look for potential issues, inconsistencies, or errors early in the process. It’s much easier and far less time-consuming to identify and eliminate problems early.

#2. Identifying and validating data sources

ETL is about pulling data from disparate data sources, such as ERP or CRM tools, applications, other databases, spreadsheets, and so on. Testers must confirm that the required data is accessible, is structured correctly, and has enough quality for use as intended.

Expert tip:

Source data in real-world systems is typically messy. Producing thorough data profiling reports is key at this stage to ensure you identify missing values, format issues, anomalies, and other inconsistencies that you want to keep out of transformation logic down the line.

#3. Write test cases

With business requirements and data profiling reports in hand, it’s time to build the test cases you need to verify the ETL process. Test cases should include functional tests, as well as edge cases and any areas you have identified as carrying a high risk of failure.

Expert tip:

Testing single transformations is good, but building test cases that capture how data is affected as it is transmitted through the entire ETL pipeline is better.

#4. Executing test cases

Now it’s time to apply your test cases. Testers should do their best to simulate real conditions or, where possible, use real conditions.

Expert tip:

ETL automation testing tools are essential here. Being able to produce consistent and reproducible tests saves a huge amount of time and effort. What’s more, ETL testing is a constant requirement as data sources are updated or changes are made to the ETL process itself.

#5. Generate reports

Once you’ve executed your tests, you must faithfully document your findings. Note down your results, and include:

- Successes

- Failures

- Deviations from expectation

- What fixes or changes must be made

These reports will do much more than just confirm the health of your system. They’ll also provide the schedule for any fixes you need to make while providing vital information that is required to optimize the ETL process.
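If your test results are already collected in a structured form, a plain-text summary is easy to generate. The result format below (a list of dictionaries with `name`, `status`, and an optional `note`) and the `summarize` helper are assumptions for this sketch; most ETL testing tools produce their own reports.

```python
from collections import Counter

def summarize(results):
    """Summarize results: dicts with 'name', 'status' ('pass' or 'fail'), optional 'note'."""
    counts = Counter(r["status"] for r in results)
    lines = [
        f"Total: {len(results)}  Passed: {counts['pass']}  Failed: {counts['fail']}"
    ]
    # List each failure with its note so fixes can be planned.
    for r in results:
        if r["status"] == "fail":
            lines.append(f"FAIL {r['name']}: {r.get('note', 'no details recorded')}")
    return "\n".join(lines)
```

The headline counts give non-technical readers the overall picture, while the failure lines give the team a starting list of what must be fixed.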

Expert tip:

Reports are for everyone, including non-technical stakeholders. Strive to reduce jargon and overly technical concepts and use visual summaries like graphs, charts, and more to explain the process.

#6. Re-testing for bugs and defects

Next up, you need to check that bugs and defects detected during test execution have been resolved. Additionally, you should confirm that any changes implemented during this process have not spawned new issues.

Expert tip:

Regression testing is crucial at this stage because the ETL process is complex and interlinked. One fix can result in unintended and entirely unexpected consequences across the ETL process.

#7. Final reports

Final reports provide a detailed summary of the ETL testing process. Highlight areas of success and any areas that require further work. Finally, deliver an overall verdict on the quality and reliability of the ETL data.

Expert tip:

Your final report is not just record keeping. Well-written and well-structured test reports will become part of the production documentation and help ensure that the ETL process is constantly improved and optimized.

#8. Closing the reports

Finally, once the reports have been delivered and understood by the various relevant stakeholders, they must be formally accepted. Reports should communicate a clear plan for any items that must be resolved or further actions that must be taken.

Expert tip:

While closing the reports is a strong sign that the ETL process has reached an acceptable level, you must remember that this work is never really done. Continuous improvement and response to changes in source data, hardware, or even evolving business rules mean that any acceptance is just a milestone in an ongoing process.

Extract transform load testing benefits

A comprehensive ETL testing process is essential for teams and products that rely on data analytics. Let’s take a look at the benefits you can unlock when you commit to an ETL testing approach.

1. Data accuracy and integrity

The core concept of ETL validation is ensuring you get clean and reliable data into your data warehouse. The right ETL testing approach means:

- You don’t lose data during the extraction

- Your transformations don’t contain errors

- Data gets to the target system as you intended

2. Saving time and money

Data warehouse ETL testing is important because it catches errors early. It’s far more desirable to identify and eliminate data problems early than it is to fix problems after the horse has bolted from the stable. Per Gartner, poor-quality data costs organizations an average of roughly $13 million each year. Start ETL testing early, and you’ll save time and money.

3. Performance

Bad ETL processes can hinder your data systems and reduce the quality of your analytics, reporting, and decision-making. A good ETL testing process helps keep you on track by identifying data bottlenecks and other areas that need improvement.

4. Compliance

There are strict data governance rules for financial institutions and healthcare providers. Failure to handle and manage data properly can lead to revoked licenses or heavy fines. ETL testing helps ensure you stay within the bounds of compliance and protect sensitive information.

5. Better decision-making

The more accurate and reliable your data is, the more confident you can be about data-driven decisions. ETL testing ensures you can count on the content in your data warehouse to deliver the insights you need to take the right steps.

Challenges associated with ETL testing

Ensuring the health of your data pipeline is essential, but it comes with some complexities. Let’s explore the challenges related to solid ETL data quality checks.

1. Data volume and complexity

A good ETL testing process means dealing with large volumes of different types of data, varying from structured to unstructured. This variation of data can quickly become complex and difficult to manage.

2. Source system dependency

As we’ve outlined above, ETL testing is about ensuring a smooth source-to-target pipeline. However, the quality of output is heavily reliant on the quality of input. Changes in the source output schema, format, or quality can cause ETL test failures that aren’t always easy to diagnose.

3. Transformation complexities

Building the logic for data transformations is a specialist undertaking. Applying business rules and cleaning or reformatting data is complex, and verifying the quality of these transformations is not always easy.

4. Shifting requirements

All testers know the pain of quickly evolving business requirements. The ETL process is a dynamic space, and so too is ETL testing. As business rules are updated and changed, testers must adapt test cases and ensure database performance is optimized.

5. Test environment limitations

Running a full-scale production environment for ETL testing is complex and expensive. However, smaller-scale test environments won’t always provide true validation because they don’t replicate the way handling huge data volumes can result in performance bottlenecks.

ETL tips and best practices

ETL testing takes time to master. Here are some tips to help you on your way.

#1. Continuous testing

ETL testing is not a one-and-done thing. It’s an ongoing practice for ensuring good-quality data, and you must perform and monitor it continuously. There’s a reason ETL QA tester is a full-time job at firms that rely on business intelligence tools.

#2. No ETL tester is an island

While ETL testing takes a black-box approach, ETL QA engineers should work with stakeholders, database admins, and the developers who build the ETL logic if they want to design meaningful tests that truly validate the ETL process.

#3. Solid documentation is critical

Sound and detailed documentation, including source-to-target mappings and a record of data lineage, is vital for pinpointing where errors in the data pipeline have emerged.

#4. Automate as much as possible

This is perhaps the most important point. Comprehensive ETL testing is resource-intensive. It’s also an ongoing process, which means it requires a lot of manual effort at regular intervals. As such, ETL testing is a perfect job for test automation software and RPA tools.

The best ETL automation testing tools

It should be clear by now that ETL automation testing gives testing teams a significant advantage when it comes to getting the most from their resources.

Thankfully, there are several quality ETL testing tools on the market. Each tool has its own pros and cons, with features and functionality that will suit varied requirements.

Deciding on the right tool depends on a few different factors, including:

- Complexity of your ETL process and business logic

- The volume of data you are transmitting

- Presence or concentration of unstructured data in your ETL process

- Technical competence and skill sets of your testers

- Your budget

Let’s take a look at the top 5 ETL testing tools.

#5. QuerySurge

QuerySurge is a subscription-based ETL testing tool with an emphasis on continuous testing. It supports source and target database combinations, offers strong automation capabilities, and is built for large, complex data warehousing needs.

The user interface is a pleasure to use, and its reporting capabilities are excellent. However, some users have bemoaned QuerySurge’s expensive and opaque pricing, while others have criticized its lack of user-friendliness and steep learning curve for inexperienced users.

#4. iCEDQ

iCEDQ is a quality tool for data testing and data quality monitoring. It offers rule-based testing and interesting ML-assisted error detection. Tracking, reporting, and visualization are particularly strong suits for iCEDQ, making it a good tool for firms with critical data compliance and regulatory needs.

That said, implementing the tool into complex ETL landscapes is one of iCEDQ’s most notable drawbacks. In addition, the user interface is pretty complex and won’t suit less technical teams.

#3. RightData

RightData is a user-friendly tool that boasts strong no-code capabilities for both ETL testing and data validation. The tool is super flexible and works across different databases and cloud data warehouses. With a range of pre-built test templates, superb visualization capabilities, and seamless integration with workflow tools, it’s clear to see why RightData has gained popularity in recent years.

However, while RightData has many desirable characteristics, it can be expensive if you need to test a lot of ETL processes. While it is subscription-based, prices can quickly escalate with high levels of data usage and additional features. When compared to ZAPTEST’s predictable flat pricing model and unlimited licenses, RightData’s approach seems to penalize growing or scaling companies.

#2. BiG EVAL

BiG EVAL is a great choice for complex ETL systems and legacy warehouse implementations. It uses rule-based data validation and has powerful data profiling capabilities, which makes it a good choice for ETL testing. BiG EVAL also provides users with great automation options for designing and scheduling tests, and when combined with excellent reporting and visualization abilities, it’s up there with the most comprehensive tools for ETL testing.

That said, implementing BiG EVAL is an even bigger job. When compared to no-code tools like ZAPTEST, the interface can seem a bit old school. It’s important to note that ETL testing is just one of BiG EVAL’s use cases, so its license-based pricing might prove prohibitive for some teams if you are paying for features and functions you don’t strictly need.

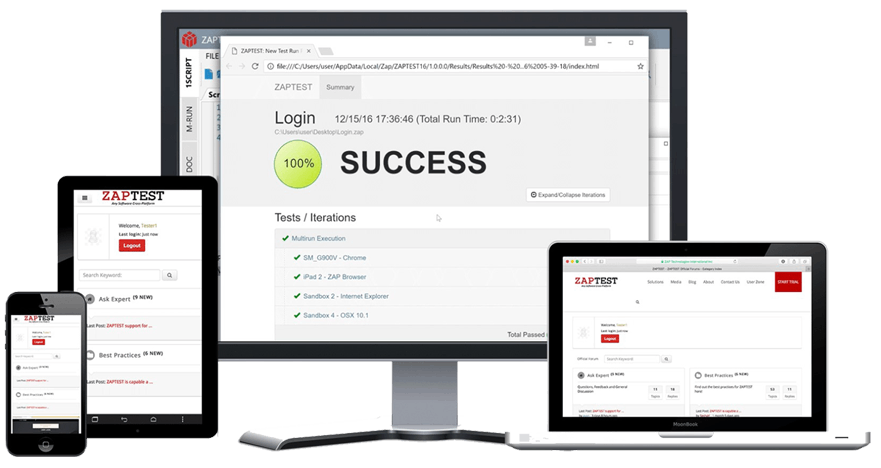

#1. ZAPTEST: The no.1 choice for ETL testing

While ZAPTEST is not a dedicated ETL testing tool, it offers the flexibility and scalability to help with several of the tasks that compose a thorough ETL testing approach.

As you can see from the Different types of ETL testing section above, testing the ETL process requires data validation, integration, performance, functionality, and regression testing. ZAPTEST can do all of this and more. Our tool’s End-to-End Testing and Metadata testing capabilities are key features for ensuring your analytics and business intelligence are up to scratch and ready to deliver results and value.

ZAPTEST also comes with one of the best RPA tools on the market. In the context of ETL testing, RPA can provide serious value by generating realistic test data, automating repetitive manual tasks, and helping you introduce the continuous testing you need for a rock-solid ETL process.

With ZAPTEST’s no-code capabilities, lightning-fast test creation, and seamless integration with other enterprise tools, it’s a one-stop-shop for automated ETL testing and much, much more.

Final thoughts

Extract transform load testing is like establishing a quality control department for your data warehouse. It’s not just concerned with whether data has been transferred from source to target; it’s also about ensuring that it has arrived intact and as expected.

When it comes to the crunch, if you have bad data, you’ll end up making misinformed decisions. Proper ETL testing is an investment in the integrity of your entire data ecosystem. However, for many businesses, the time and expense involved in ETL testing is something they struggle to afford.

Automation of ETL testing helps you test quicker and more efficiently while saving money over the long term. Increasing test coverage and regression testing capabilities can help boost your data integrity because you can test at a far higher frequency than if you were stuck with manual testing.

What’s more, using ETL automation testing tools reduces human error while freeing up testers for more creative or value-driven tasks. Embracing test automation and RPA tools like ZAPTEST is one decision that you won’t need to run through your business intelligence tools.