Ad-hoc testing is a type of software testing that developers and software companies implement when checking the software’s current iteration. This form of testing gives a greater level of insight into the program, locating issues that conventional testing might be unable to highlight.

It’s paramount that testing teams fully understand the ad-hoc testing process so they know how to work around its challenges and can implement the technique successfully.

Knowing exactly how ad-hoc testing works, and which tools can facilitate its implementation, allows a business to continuously enhance its own quality assurance procedures. The formal testing process follows very specific rules, which could result in the team missing certain bugs – ad-hoc checks can circumvent these blind spots and quickly test every software feature.

In this article, we closely examine ad-hoc testing and how you can use it to your advantage when developing a software product.

Ad-Hoc Testing Meaning: What is Ad-Hoc Testing?

Ad-hoc testing is a quality assurance process that avoids formal rules and documentation – helping testers find errors in their application that conventional approaches can’t identify. This typically requires comprehensive knowledge of the software before testing starts – including an understanding of the program’s inner workings. These ad-hoc checks aim to break the application in ways that reflect user input, accounting for various potential situations so the developers can patch any existing issues.

The lack of documentation is central to this technique, which includes no checklist or test cases to guide testers across an application’s features. Ad-hoc testing is entirely about testing the software in whichever way a team decides is effective at that specific moment. This might take pre-existing formal tests into account, but could also simply involve conducting as many tests as possible in the (likely limited) time that is allotted for this technique.

1. When and why do you need to do Ad-Hoc Testing in software testing?

The main reason companies conduct ad-hoc testing is because of its ability to uncover errors that traditional approaches cannot find. This could be for any number of reasons, such as conventional test cases following an especially standardised process that cannot account for an application’s idiosyncrasies.

Each testing type can offer new perspectives and interesting approaches to quality assurance – this also shows issues with the usual test strategy. For example, if ad-hoc testing can identify a concern that the team’s test cases do not address, this suggests they could benefit from recalibrating their testing methodology.

Testers can conduct ad-hoc checks at any point in the testing process. This typically serves as a complement to traditional (and more formal) quality assurance and, with this in mind, testers may perform ad-hoc inspections while their colleagues conduct more formal examinations. However, they may instead prefer to save ad-hoc checks until after the formal testing process as a follow-up that specifically targets potential blind spots.

Ad-hoc testing could also be useful when time is especially limited, as its lack of documentation makes it quick to carry out – the right time depends upon the company and its preferred approach.

2. When you don’t need to do Ad-Hoc Testing

If there isn’t adequate time to perform both ad-hoc and formal testing, it’s important that the team prioritises the latter as this ensures substantial test coverage – even if some gaps still exist.

If the team’s formal tests find bugs that require fixing, it’s generally better to wait until after the developers complete the necessary changes to enact ad-hoc checks. Otherwise, the results they deliver could soon become outdated, especially if the tests relate to the component already experiencing bugs.

In addition to this, ad-hoc testing must happen before the beta testing stage.

3. Who is involved in Ad-Hoc Testing?

There are several key roles involved in the ad-hoc testing process, including:

• Software testers are the main team members who conduct ad-hoc checks. If enacting buddy or pair testing, then several of these testers will work together on the same components.

• Developers may independently use these checks before the formal quality assurance stage to quickly inspect their own software, though this is in less depth than dedicated ad-hoc testing.

• Team or department leaders authorise the overall testing strategy – helping the testers determine when to begin ad-hoc testing and how to perform it without disrupting other checks.

Benefits of Ad-Hoc Testing

The advantages of ad-hoc testing in software testing include:

1. Quick resolutions

As these tests don’t involve frequent documentation before, during, or after the checks, it’s possible for teams to identify issues much more quickly. This simplicity offers tremendous freedom to testers.

For example, if they test a component and cannot identify any errors, the team may simply move on to the next test without noting this in a document.

2. Complements other testing types

No testing strategy is perfect, and 100% coverage is usually impossible to achieve – even with a comprehensive schedule. There will always be gaps in conventional testing so it’s important that companies integrate multiple approaches.

Ad-hoc testing specifically aims to find the issues which formal testing cannot cover – which broadens overall test coverage.

3. Flexible execution

Ad-hoc testing can happen at any point in the quality assurance process before beta testing, letting companies and teams decide when is best to execute these checks. They might choose to perform ad-hoc tests in tandem with conventional testing or can wait until afterward – no matter what, the team benefits from the choices at their disposal.

4. Greater collaboration

Developers are more involved with this process than with many other forms of testing – especially if the company is using buddy and pair testing.

As a result, the developers get better insight into their own applications and might be able to address bugs to a higher standard. This helps improve the software’s overall quality even further.

5. Diverse perspectives

Ad-hoc testing can show the application from new angles, helping testers to engage with these features in new ways. Additional perspectives are critical throughout testing as formal checks often have at least minor gaps.

If ad-hoc testers use the software with the specific intent of breaking it, they’ll be able to pinpoint the program’s limits more easily.

Challenges of Ad-Hoc Testing

The ad-hoc testing process also has several challenges, such as:

1. Difficulty with reporting

The lack of documentation makes ad-hoc testing much faster but can also make reporting difficult for anything other than a major issue.

For example, one previously-conducted check might become more relevant at a later date despite not initially leading to significant results. Without comprehensive documentation, the team might not be able to explain these tests.

2. Less repeatable

Along similar lines, the testers might not be fully aware of the exact condition necessary to cause the reactions they observe. For example, an ad-hoc check that returns an error may not have sufficient information for the team to take action. They might be unaware of how to repeat this test and get the same result.

3. Requires software experience

As speed is key throughout ad-hoc testing and it usually involves trying to break the application, it’s important that these testers have an intimate understanding of this program.

Knowing how it works allows the testers to break and manipulate the software in more ways, but this could significantly increase the skill demands for ad-hoc testing.

4. Limited accountability

A lack of documentation can cause more issues than just poor reporting; it can also inadvertently lengthen the testing process, impacting the usefulness of quick individual ad-hoc tests.

Testers can struggle to keep track of their progress without sufficient documentation throughout each stage. This may even lead to them repeating a check that other testers already completed.

5. May not reflect the user experience

The aim of virtually every testing type is to account for errors that affect end users in some way. Ad-hoc testing relies primarily on an experienced tester trying to emulate an inexperienced user, which is difficult to sustain. This emulation should be consistent across each check – up to and including their attempts to break the application.

Characteristics of Ad-Hoc Tests

The main characteristics of successful ad-hoc tests include:

1. Investigative

The main priority of ad-hoc testing is to identify errors with the application using techniques that conventional checks do not account for. Ad-hoc examinations scour this software for the express purpose of finding holes in the team’s testing procedure, including the coverage of their test cases.

2. Unstructured

Ad-hoc checks usually have no set plan beyond conducting as many tests as possible outside the typical bounds of formal quality assurance. Testers will typically group the checks by component for convenience but even this isn’t necessary – they might devise the checks while performing them.

3. Experience-driven

Ad-hoc testers use their pre-existing software experience to assess which tests would deliver the most benefits and address common blind spots in formal testing.

Though the testing process is still fully unstructured, testers draw on their knowledge of previous ad-hoc checks, among other experience, when deciding their strategy.

4. Wide-ranging

There are no exact guides to which checks the team should run during ad-hoc testing, but they typically cover a range of components – possibly with more focus on the application’s more sensitive aspects. This helps testers guarantee their examinations are able to fully complement formal testing.

What do we test in Ad-Hoc Tests?

Quality assurance teams usually test the following during ad-hoc testing:

1. Software Quality

These checks aim to identify errors in the application that conventional testing cannot uncover; this means the process mainly tests the application’s general health.

The more bugs which ad-hoc testing can locate, the more improvements developers can implement before their deadline.

2. Test cases

Ad-hoc testing generally doesn’t implement test cases – and this is specifically so the team can investigate how effective they are at providing ample coverage. The test cases are likely inadequate if ad-hoc checks can find errors that conventional testing processes cannot.

3. Testing staff

The goal could also be to check the testing team’s skills and knowledge, even if the test cases are adequate. For example, their methodology of implementing the cases may be insufficient and ad-hoc testing might be critical for addressing the resultant gaps in test coverage.

4. Software limits

Ad-hoc testing also seeks to understand the application’s limits – such as how it responds to unexpected inputs or high system loads. The testers could be specifically investigating the program’s error messages and how well this application performs when under significant pressure.

Clearing up some confusion: Ad-Hoc Testing and Exploratory Testing

Some people consider ad-hoc and exploratory testing to be synonymous, though the truth is more complicated than this.

1. What is Exploratory Testing?

Exploratory testing refers to quality assurance procedures that investigate the software from a holistic point of view and specifically combine the discovery and test processes into a single method. This is typically a middle-ground between fully structured testing and entirely free-form ad-hoc checks.

Exploratory testing works best in specific scenarios, such as when rapid feedback is necessary or if the team must address edge cases. This type of testing usually reaches its full potential when the team uses scripted testing alongside it.

2. Differences between Exploratory Testing and Ad-Hoc Testing

The biggest distinction between ad-hoc and exploratory testing is that exploratory testing uses documentation to record and facilitate its checks, while ad-hoc testing avoids this entirely. Exploratory testing puts more of an emphasis on test freedom than scripted testing does, but never to the same level as an ad-hoc approach, which is entirely unstructured.

Exploratory testing also involves learning about the application and its inner workings during these checks – ad-hoc testers instead often have a comprehensive knowledge of the software’s functionality before they begin.

Types of Ad-Hoc Tests

There are three main forms of ad-hoc testing in software testing:

1. Monkey testing

Perhaps the most popular type of ad-hoc testing, monkey tests are those that involve a team randomly looking at different components.

This typically takes place during the unit testing process and enacts a series of checks without any test cases. The testers independently investigate the data in completely unstructured ways, letting them examine the broader system and its ability to resist intense strain from user inputs.

Observing the output of these unscripted techniques helps the testing team identify errors that other unit tests have missed due to shortcomings in conventional testing methods.
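The monkey testing idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `parse_quantity` is a hypothetical stand-in for whatever unit the team wants to exercise, and the rule that a `ValueError` counts as a clean rejection is an assumption rather than a general standard. The fixed seed is there so that any surprising result can be replayed later.

```python
import random
import string


def parse_quantity(raw: str) -> int:
    """Hypothetical unit under test: parse a quantity field from a form."""
    value = int(raw.strip())
    if value < 1 or value > 999:
        raise ValueError("quantity must be between 1 and 999")
    return value


def random_input(rng: random.Random, max_len: int = 12) -> str:
    """Build a random string of printable characters, including whitespace."""
    length = rng.randint(0, max_len)
    return "".join(rng.choice(string.printable) for _ in range(length))


def monkey_test(target, runs: int = 1000, seed: int = 0) -> list:
    """Feed random inputs to `target` and collect unexpected outcomes.

    A ValueError is treated as a handled rejection (an assumption for this
    sketch). Any other exception, or a result outside 1..999, is recorded.
    """
    rng = random.Random(seed)  # fixed seed so a finding can be reproduced
    findings = []
    for _ in range(runs):
        raw = random_input(rng)
        try:
            result = target(raw)
        except ValueError:
            continue  # the input was rejected cleanly
        except Exception as exc:  # anything else is worth reporting
            findings.append((raw, repr(exc)))
            continue
        if not 1 <= result <= 999:
            findings.append((raw, f"out-of-range result {result}"))
    return findings
```

Calling `monkey_test(parse_quantity, runs=500, seed=1)` returns a list of `(input, problem)` pairs. Re-running with the same seed repeats the same inputs, which goes some way towards the repeatability concerns discussed earlier.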

2. Buddy testing

In an ad-hoc context, buddy tests use a minimum of two staff members – typically a tester and a developer – and primarily take place after the unit testing stage. The ‘buddies’ work together on the same module to pinpoint errors. Their diverse skill sets and comprehensive experience make them a more effective team, which helps to alleviate many of the problems that arise due to a lack of documentation.

The developer might even suggest a number of the tests themselves, letting them identify the components that may be in need of more attention.

3. Pair testing

Pair testing is similar in that it involves two staff members but this is usually two separate testers, one of whom executes the actual tests while the other takes notes.

Even without formal documentation, note-taking may let the team informally keep track of individual ad-hoc checks. The roles of tester and scribe can switch depending upon the test or the pair might maintain their assigned roles throughout the entire process.

The tester who has more experience is typically the one who performs the actual checks – though they always share the work with one another.

Manual or automated Ad-Hoc Tests?

Automated testing can help teams save even more time throughout the quality assurance stage, which lets the testers fit more checks into their schedule. Even without a definite structure, it is essential that testers work to maximize coverage, and automation encourages more in-depth inspections of the software.

Automated ad-hoc checks are generally more accurate than manual tests due to their ability to avoid human error during rote tasks – this is especially helpful when enacting the same tests on different iterations. The success of this procedure usually depends upon the automated testing tool that the team selects and its functionality.

However, automated testing does have certain limitations. For example, the main strength of ad-hoc testing is its ability to emulate user input and enact random checks as the tester comes up with them. These tests could lose their randomness if the organisation’s testing program struggles with complex checks.

The time it takes to automate these highly specific tasks might also limit the typical time savings of this process. It’s important that teams thoroughly investigate the available automation tools to find one that matches their company’s project.

What do you need to start Ad-Hoc Testing?

Here are the main prerequisites of ad-hoc testing:

1. Qualified staff

As ad-hoc tests are quick, random inspections of the software’s inner workings, it typically helps to have testers who are experienced with the software. They should also have a working knowledge of key testing principles – this lets them easily identify the most effective checks.

2. An unstructured approach

Testers must be willing to abandon their usual strategies for ad-hoc testing; this mindset is just as critical as the quality checks themselves. This method can only succeed without structure or documentation and it’s vital that the testers remember this at every stage.

3. Automation software

Though ad-hoc testing relies more on testing random inputs and conditions, automation is still a very effective technique in any context.

For this reason, ad-hoc checks should still implement automated testing tools where possible, as the right application can significantly streamline the process.

4. Other forms of testing

Ad-hoc tests work best alongside other checks that take a more formal approach – helping the team guarantee substantial coverage across the software. It’s vital that the testers mix various techniques, though this could be before, during, or after they complete ad-hoc testing.

Ad-Hoc Testing process

The usual steps that testers should follow when they perform ad-hoc testing in software testing are:

1. Defining ad-hoc test objectives

This stage is limited due to the lack of documentation and structure but it’s still paramount that the team has a clear focus. The testers may start to share vague ideas about which upcoming tests to run and the components to prioritize.

2. Selecting the ad-hoc test team

As the team brainstorms a number of potential ad-hoc checks, they also figure out which testers would be best for this type of testing. They usually select testers who intimately understand the application and may also pair them with a developer.

3. Executing ad-hoc tests

After deciding which testers are right for this stage, these team members begin their checks at an agreed-upon point in testing. Their aim is to perform as many of the ad-hoc checks as possible – which the testers might not devise until this stage.

4. Evaluating the test results

Upon completing the tests (or even between individual checks) the testers will evaluate the results but without formally documenting them in a test case. If they uncover any problems with the application, they record them informally and discuss the team’s next steps.

5. Reporting any discovered bugs

Once they evaluate the results, the testers must inform developers about the errors present in the software so they have ample time to fix them before release.

The testing team also uses the information to determine how to improve their formal test processes.

6. Retesting as necessary

The testing team will likely repeat the ad-hoc process for new iterations of the application to check how well it handles updates. As the testers will have fixed many of the previously-identified gaps in their test cases, future ad-hoc checks might require a different approach.

Best Practices for Ad-Hoc Testing

There are certain practices that testing teams should implement during ad-hoc testing, including:

1. Target potential testing gaps

While ad-hoc testing involves much less planning than other types, the team still aims to address shortcomings in quality assurance. If the ad-hoc testers suspect any specific problems with the team’s test cases, they should prioritise this while conducting their checks.

2. Consider automation software

Automation strategies such as hyperautomation can offer many benefits to companies wanting to conduct ad-hoc tests.

The success of this depends upon several key factors, including the tool that the business chooses, as well as the general complexities of their ad-hoc tests.

3. Take comprehensive notes

The lack of documentation in ad-hoc testing is mainly there to streamline the process, but the team could still benefit from making informal notes as they proceed. This gives testers a clear record of these checks and their results, increasing their overall repeatability.
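One lightweight way to keep such informal notes is a small in-memory log rather than a formal test case document. The sketch below is an assumption about what a team might find useful; the class names (`AdHocNote`, `SessionNotes`) and the fields they hold are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AdHocNote:
    """One informal observation made during an ad-hoc session."""
    component: str
    action: str
    observed: str
    is_defect: bool = False
    recorded_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


@dataclass
class SessionNotes:
    """Running notes for a single tester or testing pair."""
    tester: str
    notes: list = field(default_factory=list)

    def record(self, component, action, observed, is_defect=False):
        """Append a note and return it so the scribe can read it back."""
        note = AdHocNote(component, action, observed, is_defect)
        self.notes.append(note)
        return note

    def defects(self):
        """Notes that the tester flagged as likely defects."""
        return [n for n in self.notes if n.is_defect]

    def already_checked(self, component):
        """True if someone has already noted a check on this component."""
        return any(n.component == component for n in self.notes)
```

A scribe in a pair testing session could call `record(...)` after each check, then hand `defects()` to the developers and use `already_checked(...)` to avoid repeating work.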

4. Keep refining the tests

Ad-hoc testers continuously refine their approach to account for changes in the team’s testing strategy. When looking at newer versions of the company’s software, for example, they might adjust these checks in response to newer and more inclusive formal test cases.

7 Mistakes & Pitfalls in Implementing Ad-Hoc Tests

As with any testing process, there is a wide range of potential mistakes which the team should work to avoid, such as:

1. Inexperienced testers

To maintain the expected pace of ad-hoc testing, the team leader must assign testers based on the knowledge and skills they have. While many forms of testing can accommodate entry-level quality assurance staff, ad-hoc checks require team members who fully understand the software, preferably with experience in running these tests.

2. Unfocused checks

Ad-hoc testing can significantly improve test coverage due to its faster pace – the team doesn’t need to fill out extensive documentation before and after each check.

However, the ad-hoc testers must still maintain a strong focus; for example, they might decide to prioritise certain components with a greater risk of failure.

3. No planning

Avoiding any plan whatsoever might limit the effectiveness of ad-hoc testing. Despite the unstructured nature of this approach, it’s important that the team has a rough idea of which tests to run before they begin.

Time is limited during this process and knowing how to proceed can offer many benefits.

4. Overly structured

On the opposite end of the spectrum, this approach typically relies upon a lack of planning as this helps testers actively subvert test cases and find hidden errors.

Ad-hoc testing is also known as random testing, and forcing a structure onto it might prevent these checks from locating bugs.

5. No long-term changes

The purpose of ad-hoc testing is to identify any weaknesses in the team’s test cases; this examines their overall strategy just as much as the software itself.

However, this means ad-hoc tests are generally only effective if the team uses this information to refine their formal checks over time.

6. Incompatible datasets

Virtually every form of testing requires a form of simulated data to assess how the application responds; some tools let testers automatically populate a program with mock data.

However, this might not reflect how a user would engage with the software – ad-hoc checks require datasets that resemble what the software will likely encounter.

7. Information silos

It’s essential that the testers and developers are in constant communication with each other, even if the latter is not part of the ad-hoc testing process.

This helps everyone understand which tests have been conducted – showing the next actions to take while also preventing the testers from needlessly repeating certain checks.

Types of Outputs from Ad-Hoc Tests

Ad-hoc checks produce several distinct outputs, including:

1. Test results

The individual tests produce different results specific to the exact component and approach involved – this can take many forms.

It’s usually the tester’s responsibility to determine if the results constitute an error, though a lack of documentation makes it difficult to compare this with their expectations. The team passes these results to the developers if they notice any problems.

2. Test logs

The software itself uses a complicated system of internal logs to monitor user inputs and highlight a number of file or database issues that might emerge.

This could point towards an internal error, including the specific part of the software causing the issue. With this information, ad-hoc testers and developers can address the issues that they discover much more easily.
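As a rough illustration of how a tester might skim these logs after a session, the snippet below counts error-level entries per component. The log line format (`date time LEVEL [component] message`) and the component names are assumptions made for the example; a real application’s logs will likely need a different pattern.

```python
import re
from collections import Counter

# Assumed format: "2024-05-01 10:15:02 ERROR [checkout] message text"
LOG_LINE = re.compile(
    r"^(?P<timestamp>\S+ \S+) (?P<level>[A-Z]+) "
    r"\[(?P<component>[^\]]+)\] (?P<message>.*)$"
)


def summarize_errors(lines) -> Counter:
    """Count ERROR and CRITICAL entries per component.

    Lines that do not match the assumed format are skipped silently.
    """
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match and match["level"] in {"ERROR", "CRITICAL"}:
            counts[match["component"]] += 1
    return counts


sample_log = [
    "2024-05-01 10:15:02 INFO [search] query received",
    "2024-05-01 10:15:03 ERROR [checkout] payment token missing",
    "2024-05-01 10:15:04 CRITICAL [checkout] database write failed",
    "2024-05-01 10:15:05 ERROR [search] index timeout",
    "not a structured line",
]
```

Running `summarize_errors(sample_log)` on the sample above points to the checkout component as the first place to look.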

3. Error messages

Many ad-hoc checks specifically aim to break the software and expose its limits, which means the application’s error messages are one of the most common outputs from these tests.

By deliberately causing error messages, the team can showcase what the average end user sees whenever the unexpected actions they take have an adverse effect on the program’s operation.

Ad-Hoc Testing examples

Here are three ad-hoc testing scenarios that show how a team might implement it for different applications:

1. E-commerce web application

If a company wishes to test an ecommerce-based web app, they could use ad-hoc testing – specifically monkey testing – to see how well the platform handles unexpected user interactions.

The testers may aim to push each feature to its limits, such as by adding items to their basket in unrealistic quantities or trying to buy products that are out of stock. They aren’t constrained by the team’s test cases and there are few limits to which checks they could perform; the testers might even try to complete purchases using outdated URLs.
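The basket checks in this scenario could be approximated with a short script like the one below. It is a sketch only: the `Cart` class is a toy model standing in for the real application, and the product names, stock levels, and quantities are invented for the example. The script fires random add-to-basket requests and records any moment where the basket holds more than the stock allows.

```python
import random


class OutOfStock(Exception):
    """Raised when a request exceeds the available stock."""


class Cart:
    """Toy basket model (hypothetical) standing in for the real application."""

    def __init__(self, stock: dict):
        self.stock = dict(stock)
        self.items = {}

    def add(self, sku: str, quantity: int) -> None:
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        available = self.stock.get(sku, 0)  # unknown products have no stock
        if self.items.get(sku, 0) + quantity > available:
            raise OutOfStock(sku)
        self.items[sku] = self.items.get(sku, 0) + quantity


def hammer_cart(seed: int = 0, steps: int = 500) -> list:
    """Send random add requests and return any stock-limit violations."""
    rng = random.Random(seed)
    stock = {"mug": 5, "poster": 0, "lamp": 2}
    cart = Cart(stock)
    violations = []
    for step in range(steps):
        sku = rng.choice(["mug", "poster", "lamp", "discontinued"])
        quantity = rng.choice([-1, 0, 1, 3, 10_000])
        try:
            cart.add(sku, quantity)
        except (ValueError, OutOfStock):
            pass  # the request was rejected cleanly
        # Invariant: the basket never holds more than what is in stock.
        for held_sku, held in cart.items.items():
            if held > stock.get(held_sku, 0):
                violations.append((step, held_sku, held))
    return violations
```

An empty list from `hammer_cart()` means the toy model held up; against a real platform, the same loop would drive the application’s own interface instead of this class.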

2. Desktop application

Ad-hoc testers can also implement these techniques for desktop applications with a possible focus on different machines and how well they each accommodate the program.

The team members might perform these checks repeatedly to see how changing hardware or software settings affects an application’s overall performance. For example, a specific graphics card may struggle to render the interface.

Alternatively, these testers could simply give their program impossible inputs and see how it responds, such as if it can correctly display error messages which explain the issue adequately to the end user.

3. Mobile application

One way that ad-hoc testers could examine a mobile application is to test its security protocols – they could try to directly access the app’s development tools, for example.

The team may try to see if they’re able to perform unauthorized actions by finding common loopholes and exploits; they could specifically ask staff members with experience in app security to facilitate this.

This might also involve pair testing with the developers due to their insight into the app’s design, letting a tester break the software and show exactly where its security is lacking.

Types of errors and bugs detected through Ad-Hoc Testing

Ad-hoc checks can uncover many issues with a program, such as:

1. Functionality errors

Using ad-hoc testing to examine an application’s basic features might reveal serious bugs which affect how end users may engage with it.

For example, monkey testing an e-commerce site’s payment options will illustrate the conditions that prevent the transaction.

2. Performance issues

The testers may specifically work to create performance issues in the program – such as by filling the database with various spam inputs.

This could manifest as significant lag time or even general software instability, which could lead to a (potentially system-wide) crash.
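To give a feel for the spam-input approach, the sketch below fills an in-memory SQLite table with junk text and times a simple query afterwards. Everything here is an assumption for illustration: the `comments` table, the row count, and the choice of SQLite are placeholders, and the timings say nothing about how a production database would behave.

```python
import random
import sqlite3
import string
import time


def spam_comments(row_count: int = 2000, seed: int = 0) -> dict:
    """Insert random junk rows, then time a wildcard search over them."""
    rng = random.Random(seed)
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE comments (id INTEGER PRIMARY KEY, body TEXT)")

    def junk() -> str:
        length = rng.randint(0, 200)
        return "".join(rng.choice(string.printable) for _ in range(length))

    start = time.perf_counter()
    # Parameterised inserts, so quotes in the junk cannot break the SQL.
    conn.executemany(
        "INSERT INTO comments (body) VALUES (?)",
        ((junk(),) for _ in range(row_count)),
    )
    conn.commit()
    insert_seconds = time.perf_counter() - start

    start = time.perf_counter()
    matches = conn.execute(
        "SELECT COUNT(*) FROM comments WHERE body LIKE ?", ("%a%",)
    ).fetchone()[0]
    query_seconds = time.perf_counter() - start

    stored = conn.execute("SELECT COUNT(*) FROM comments").fetchone()[0]
    conn.close()
    return {
        "stored": stored,
        "matches": matches,
        "insert_seconds": insert_seconds,
        "query_seconds": query_seconds,
    }
```

Comparing `query_seconds` across increasingly large `row_count` values gives a tester a quick, informal sense of where lag starts to appear.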

3. Usability problems

These checks could also highlight faults with the interface and general user experience. The UI of a mobile app, for example, might present differently on another operating system or screen resolution. A poor interface may lead to users struggling to operate this application.

4. Security flaws

The random nature of ad-hoc testing allows it to cover a range of common and rare security concerns; a tester might use these checks to find a program’s administrative backdoors.

Alternatively, their inspection may show that the software has no data encryption.

Common ad-hoc testing metrics

Ad-hoc testing uses various metrics to evaluate its results, including:

1. Defect detection efficiency

This metric looks at how effective the testing process is at finding defects across each form of testing, including ad-hoc testing. Defect detection efficiency is the number of defects the tests discover divided by the total number of defects in the software, expressed as a percentage – showing how effective the tests are.

2. Test coverage rate

An auxiliary function of ad-hoc testing is to increase coverage by checking components in a way that test cases don’t account for. This means the testers will also aim to radically increase test coverage across every check as much as they can.

3. Total test duration

Ad-hoc testing is much quicker than other quality assurance processes – and it’s essential that the testers work to maintain this advantage. Test duration metrics show team members how they can save time and compound the advantages of ad-hoc strategies even further.

4. Crash rate

These tests often aim to break the software and cause a crash or serious error – letting them go beyond typical test strategies and find unexpected issues. To this end, it can help to know how often the software crashes and what causes these problems.
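Two of the metrics above reduce to simple arithmetic, sketched here in Python. The formulas rest on assumptions: the total defect count is taken as defects found in testing plus defects reported after release, and crash rate is taken as crashes per test session. Teams may count either differently, and the function names are illustrative only.

```python
def defect_detection_efficiency(found_in_testing: int,
                                found_after_release: int) -> float:
    """Percentage of all known defects that testing caught.

    Assumes the total is testing defects plus post-release defects.
    """
    total = found_in_testing + found_after_release
    if total == 0:
        raise ValueError("no defects recorded, so the ratio is undefined")
    return 100.0 * found_in_testing / total


def crash_rate(crashes: int, sessions: int) -> float:
    """Percentage of test sessions that ended in a crash (assumed definition)."""
    if sessions <= 0:
        raise ValueError("at least one session is required")
    return 100.0 * crashes / sessions
```

For instance, 45 defects caught in testing against 5 reported after release gives `defect_detection_efficiency(45, 5)` of 90.0, and 3 crashes across 120 sessions gives `crash_rate(3, 120)` of 2.5.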

5 Best Ad-Hoc Testing Tools

There are many free and paid testing tools available for ad-hoc testing in software testing – the best five are as follows:

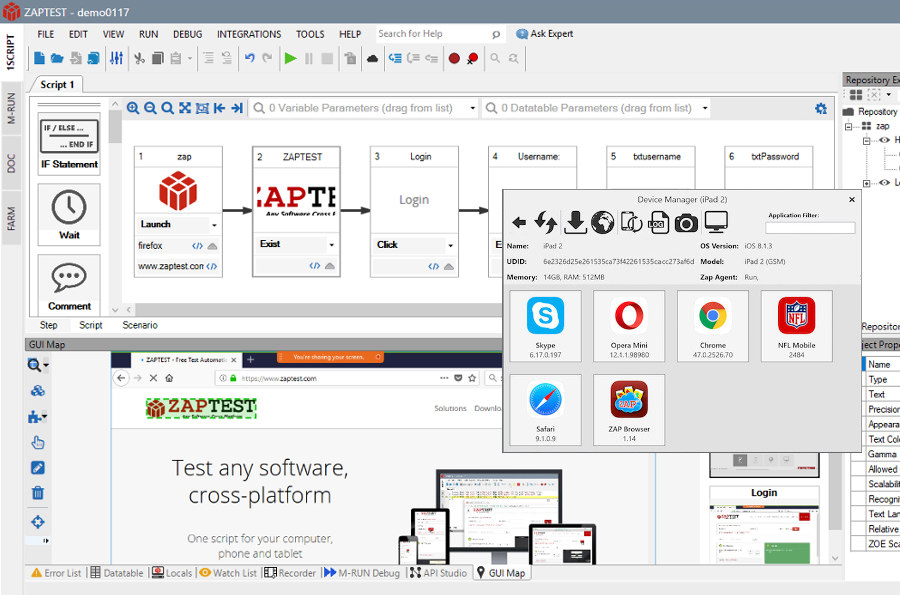

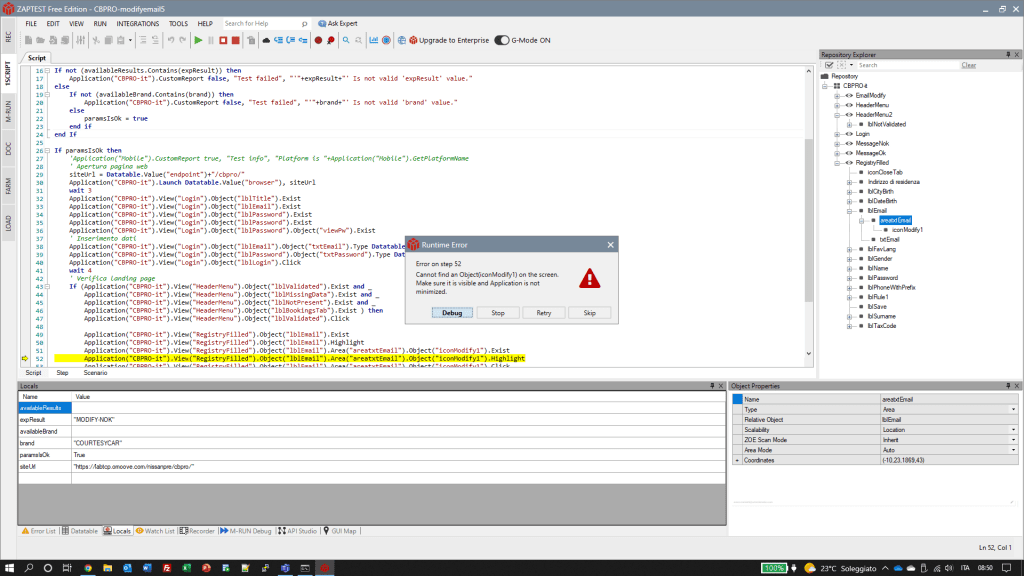

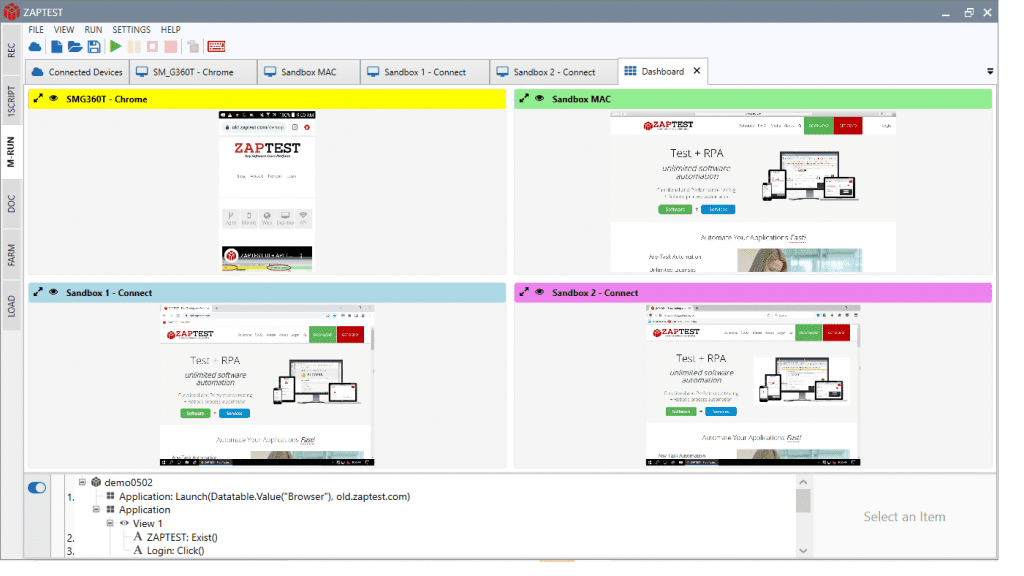

1. ZAPTEST Free & Enterprise Edition

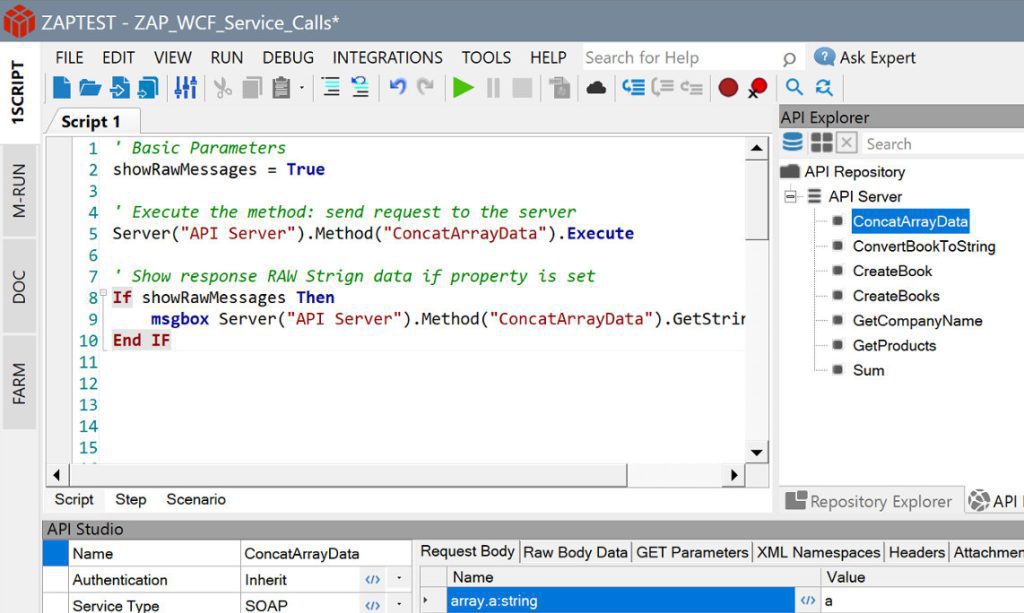

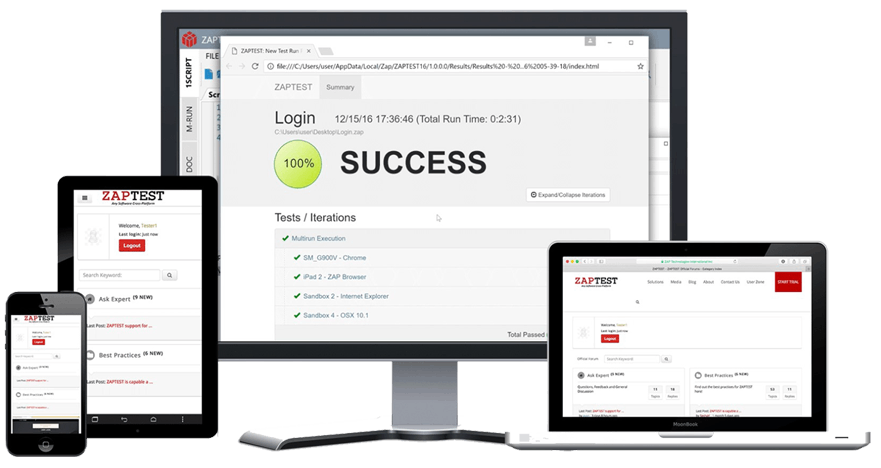

ZAPTEST is a comprehensive software testing program that provides a strong level of test + RPA functionality in both its free and enterprise versions.

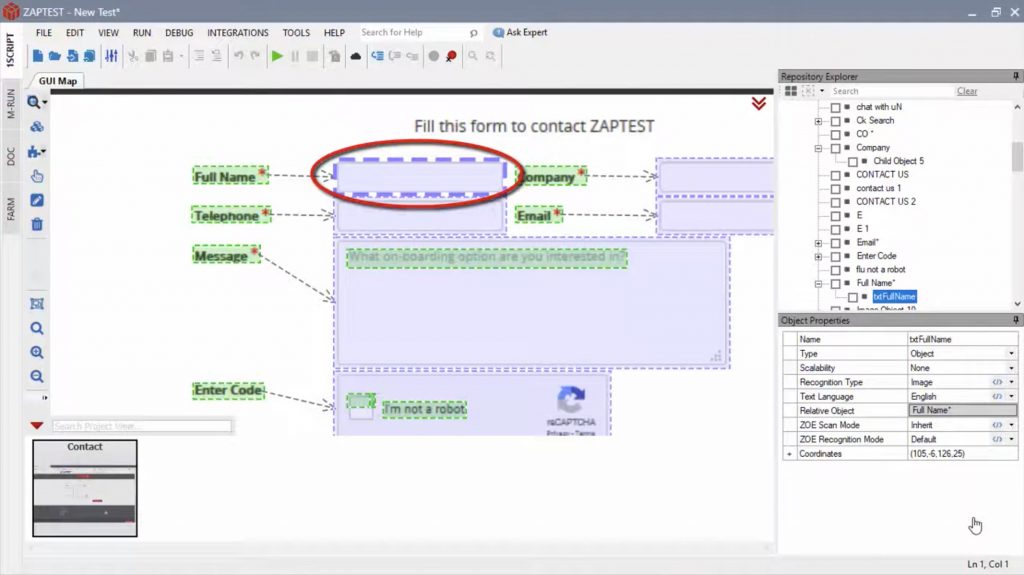

This full-stack software automation + RPA Suite allows for full testing on different desktop and mobile platforms; the software’s 1SCRIPT technology also lets users execute the same checks repeatedly with ease. On top of this, the tool leverages state-of-the-art computer vision, which makes it possible for ZAPTEST to run ad-hoc tests from a human perspective.

2. BrowserStack

BrowserStack is a cloud platform that can facilitate testing on over 3,000 diverse machines, with the additional feature of automating Selenium scripts. Though it provides strong coverage for software projects, it works best with browser and mobile applications.

BrowserStack testing solutions also include a free trial with 100 minutes of automated testing – though this might have limited use.

Though the cloud-based approach can be helpful, it also negatively affects the platform’s response time.

3. LambdaTest

LambdaTest similarly uses cloud-based technology and places a strong emphasis on browser testing which may limit its effectiveness for other applications – though it still meshes well with iOS and Android programs. This is a helpful platform when scalability is a concern and integrates with many other test hosting services.

However, some users have mixed reactions towards the application’s pricing across the various non-trial options that are available, potentially limiting accessibility for smaller organizations.

4. TestRail

TestRail is generally quite adaptable due to running entirely in-browser and, despite a strong focus on efficient test cases, also boasts direct ad-hoc functionality. The analytics it provides after every test can also help teams who actively avoid making their own independent documentation while still letting them validate their testing process.

Larger suites might struggle with its browser-based format, however, which can limit the time savings of ad-hoc testing by a significant margin.

5. Zephyr

Zephyr is a test management platform by SmartBear that helps quality assurance teams improve their testing visibility while also integrating well with other bug-tracking software.

However, this feature is limited to certain applications, with Confluence and Jira being the ones that benefit the most from Zephyr – these might not be the most effective solutions for every business. There are several scalable programs available under the Zephyr brand at different prices.

Ad-Hoc Testing checklist, tips & tricks

Here are additional tips for teams to account for when conducting ad-hoc testing:

1. Prioritise sensitive components

Some features or components are naturally more at risk of error than others, especially if they’re important for the program’s overall function.

Every approach to testing should identify the parts of an application that may benefit from more thorough attention. This is especially helpful when the overall time for testing is limited.

2. Investigate different testing tools

The tool that an organisation implements to facilitate its tests could affect the coverage and reliability of these checks.

With ad-hoc testing, it’s worth looking at as many programs as possible to find ones that suit its user-centric aspect. Software that uses computer vision technology, like ZAPTEST, can approach ad-hoc tests using a human-like strategy.

3. Adopt an ad-hoc mindset

Ad-hoc testing offers tremendous freedom throughout the quality assurance stage, but the team must commit to it to receive the strategy’s key benefits.

For example, ad-hoc testers should eschew all of their usual documents beyond basic note-taking and they need to inspect the software from an entirely new perspective.

4. Trust testing instincts

Experience with ad-hoc testing or general software checks can help highlight common points of failure and this helps testers determine how to spot errors of all types.

It’s vital that testers trust their instincts and always use this knowledge to their advantage – they can intuit which ad-hoc checks would be most helpful.

5. Fully record discovered bugs

Though ad-hoc testing has no formal documentation and mostly relies on informal notes, it’s still essential that the team is able to identify and communicate the cause of a software error.

They must log any information the test provides that’s relevant for developers, such as any potential causes of these issues.

6. Always account for the user

Every form of testing intends to accommodate the user’s overall experience to a degree – and ad-hoc testing is no exception. Though it often looks more deeply at the application’s inner workings and even its internal code, ad-hoc testers should try to break this software in ways that users theoretically could.

7. Continuously improve the process

Testing teams should refine their approach to ad-hoc testing between multiple iterations of the same software and from one project to the next.

They can collect feedback from developers to see how well their ad-hoc tests helped the quality assurance stage and if they were able to significantly increase test coverage.

Conclusion

Ad-hoc testing can help organizations of all kinds validate their software testing strategy, but the way they implement this technique can be a significant factor in its effectiveness.

Balancing different testing types is the key to getting the most benefits from ad-hoc checks – especially as this form of testing intends to complement the others by filling a strategic gap.

With an application such as ZAPTEST, it’s possible for teams to conduct ad-hoc tests with greater confidence or flexibility, especially if they implement automation. No matter the team’s specific approach, their commitment to ad-hoc testing could revolutionize the entire program or project.